The WURFL is an “ambitious” configuration file that contains information about all known wireless devices on earth. New devices are released all the time, so the file is bound to be out of date the day after each update; even so, chances are that the WURFL lists all of the WAP devices you can purchase in the nearest shops.

Example applications of Tera-WURFL include:

- Redirecting mobile visitors to the mobile version of your site

- Detecting the type of streaming video that a visiting mobile device supports

- Detecting iPhone, iPod and iPad visitors and delivering them an iPhone-friendly version of your site

- Working with WALL4PHP or HAWHAW to write a website in an abstract language, which is then delivered to the visiting user in CHTML, XHTML, XHTML-MP, or WML, depending on what the mobile browser supports

- Detecting ringtone download support and supported ringtone formats

- Detecting the screen resolution of the device and resizing images and wallpapers/backgrounds to an appropriate size for the device.

- Detecting support for Javascript, Java/J2ME and Flash Lite

The author of Tera-WURFL is Steve Kamerman, a professional PHP Programmer, MySQL DBA, Flash/Flex Actionscript Developer, Linux Administrator, IT Manager and part-time American Soldier. This project was originally sponsored by Tera Technologies and was developed as an internal project used for delivering content to customers of the mobile ringtone and image creation site Tera-Tones.com.

What Makes Tera-WURFL Different?

There are many good mobile device detection systems out there. Some are free and some are paid, but each has a specific focus. The official WURFL API (available in PHP and Java, with .NET coming soon) is a creation of one of the founders of WURFL, Luca Passani. Luca is the driving force behind mobile device detection and is highly regarded in the mobile development community. His APIs are focused on accurate detection of mobile device capabilities. Tera-WURFL’s focus is high-performance detection of mobile devices. Here’s what that means to you.

Tera-WURFL Project Priorities

- High Performance: Tera-WURFL uses a MySQL backend (MSSQL is experimental) to store the WURFL data and cache the results of device detections. This database can be shared between many installations of Tera-WURFL, so all your sites can benefit from sharing the same cache. Non-cached lookups on my test system average about 250 devices per second, with cached detections over 1000 per second.

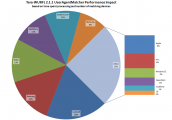

- Accurate Detection of Mobile Devices: Tera-WURFL uses over a dozen UserAgentMatchers, each tailored to its own group of mobile devices. These matchers are constantly tweaked and tuned to provide more accurate results, and they detect over 99% of visiting devices.

- Fast Detection of Desktop vs. Mobile Devices: Although most libraries detect mobile devices well, they are not very good at recognizing desktop browsers. As a result, your desktop users are detected as mobile devices and sent to the wrong site. Tera-WURFL includes a feature called the SimpleDesktop Matching Engine that differentiates between desktop and mobile browsers very quickly. Additionally, instead of tens of thousands of unique desktop user agents piling up in your cache, SimpleDesktop uses just one cache entry to represent all desktop browsers.

- Usability: Since PHP has such a large base in the small- to medium-sized website arena, there are a large number of new developers. Tera-WURFL has been designed to be easy to use and administer. Once the initial configuration is finished, you can maintain the system completely from its Web Administration Page, including the ability to update the WURFL data via the Internet.

Constant Performance and Accuracy Improvements

Using our in-house analysis and regression testing software built for Tera-WURFL, we are able to quickly perform a deep analysis of the performance and accuracy of both the UserAgentMatchers and the core. This data is then evaluated to identify potential bottlenecks in the system. We are also able to track the internal match confidence that Tera-WURFL has with each device detection and construct aggregate visualizations to determine whether new user agents on the Internet are slipping by the detection system.

Requirements

- Hardware: Architecture independent

- Software

  - Web Server: Any web server that supports PHP and the MySQLi extension. Here are some I’ve tested:

    - Apache 2.x

    - IIS 6/7 (for Windows users I recommend WampServer or XAMPP)

    - lighttpd

  - PHP

    - PHP 5.x with the following required modules:

      - MySQLi (MySQL improved extension)

      - ZipArchive (this package is included with PHP >= 5.2.0; for previous versions of PHP it is available from PECL)

  - Database: One of the following database servers:

    - MySQL >= 4.1

    - MySQL 5.x

    - Microsoft SQL Server 2005/2008 (EXPERIMENTAL)

How does it work?

When a web browser (mobile or non-mobile) visits your site, it sends a User Agent along with the request for your page. The user agent contains information about the type of device and browser that is being used; unfortunately, this information is very limited and often is not representative of the actual device. The WURFL Project collects these user agents and puts them into an XML file, commonly referred to as the WURFL File. This file also contains detailed information about each device, for example the screen resolution, audio playback capabilities, streaming video capabilities, J2ME support and so on. This data is constantly updated by WURFL contributors from around the world via the WURFL Device Database. Tera-WURFL takes the data from this WURFL file and puts it into a MySQL database (MSSQL support is experimental) for faster access, and determines which device is the most similar to the one that’s requesting your content. The library then returns the capabilities associated with that device to your scripts via a PHP associative array. Currently, the WURFL contains 29 groups of capabilities with a total of 531 capabilities.

Here’s the logical flow of a typical request:

Device Requests a Page

Someone requests one of your pages from their mobile device. Their User Agent is passed to the Tera-WURFL library for evaluation.

Request is Evaluated

Tera-WURFL takes the requestor’s user agent and puts it through a filter to determine which UserAgentMatcher to use on it. Each UserAgentMatcher is specifically designed to best match the device from a group of similar devices using Reduction in String and/or the Levenshtein Distance algorithm.

Capabilities Array is Built
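The two matching techniques named in the “Request is Evaluated” step can be sketched in plain PHP. This is only an illustration of the general ideas, not Tera-WURFL’s actual matcher code: the function names `matchByReduction` and `matchByLevenshtein`, the thresholds and the sample user agents are all assumptions made for this example. Only the built-in `levenshtein()`, `substr()` and `strncmp()` functions are real PHP.

```php
<?php
// Hypothetical helpers for illustration; not part of the Tera-WURFL API.

/**
 * Reduction in String: shorten the user agent one character at a time and
 * return the first known user agent that starts with the remaining prefix.
 * Gives up (returns null) once the prefix is shorter than $minLength.
 */
function matchByReduction($userAgent, array $knownAgents, $minLength = 8)
{
    for ($len = strlen($userAgent); $len >= $minLength; $len--) {
        $prefix = substr($userAgent, 0, $len);
        foreach ($knownAgents as $candidate) {
            if (strncmp($candidate, $prefix, $len) === 0) {
                return $candidate;
            }
        }
    }
    return null;
}

/**
 * Levenshtein Distance: return the known user agent with the smallest edit
 * distance to $userAgent, or null if none is within $maxDistance edits.
 */
function matchByLevenshtein($userAgent, array $knownAgents, $maxDistance = 10)
{
    $best = null;
    $bestDistance = $maxDistance + 1;
    foreach ($knownAgents as $candidate) {
        // Before PHP 8.0, levenshtein() only accepts strings up to 255 characters.
        $distance = levenshtein($userAgent, $candidate);
        if ($distance < $bestDistance) {
            $best = $candidate;
            $bestDistance = $distance;
        }
    }
    return $best;
}

// Sample data, made up for this example.
$knownAgents = array(
    'BlackBerry8310/4.2.2 Profile/MIDP-2.0',
    'Nokia6300/2.0 (04.20)',
);

// A newer firmware version still reduces to the same known prefix.
$byReduction = matchByReduction('BlackBerry8310/4.5.0 Profile/MIDP-2.0', $knownAgents);

// A user agent that differs by a few characters is matched by edit distance.
$byDistance = matchByLevenshtein('Nokia6300/2.0 (05.50)', $knownAgents);
```

Reduction in String suits user agents whose distinguishing part comes first (model, then version), while edit distance tolerates small differences anywhere in the string. Which technique a real matcher applies to which device family is a tuning decision that this sketch does not attempt to reproduce.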

Each device in the WURFL file and WURFL database falls back onto another device. For example, the iPhone 3GS has only a handful of capabilities of its own; it falls back onto the iPhone 3G, which adds to those capabilities and falls back onto the original iPhone, which in turn falls back onto a generic device that contains the default capabilities. Through this method of inheritance, the device entries remain very small in size. In our example, once the User Agent has been matched, the capabilities from this device are stored in the capabilities array, then the next device in its fallback tree (its parent device) is looked up and its capabilities are added, and so on all the way up to the most generic device.

Results are Cached
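The fallback walk from the “Capabilities Array is Built” step can be sketched as follows. The device table, the device IDs, the capability values and the `buildCapabilities` function are hypothetical and exist only for this example; the real data lives in the WURFL database and is far larger.

```php
<?php
// Hypothetical in-memory device table. IDs and values are made up for
// illustration and are not taken from the WURFL file.
$devices = array(
    'generic' => array(
        'fall_back'    => null,
        'capabilities' => array('is_wireless_device' => false, 'model_name' => '', 'resolution_width' => 90),
    ),
    'iphone' => array(
        'fall_back'    => 'generic',
        'capabilities' => array('is_wireless_device' => true, 'model_name' => 'iPhone', 'resolution_width' => 320),
    ),
    'iphone_3g' => array(
        'fall_back'    => 'iphone',
        'capabilities' => array('model_name' => 'iPhone 3G'),
    ),
    'iphone_3gs' => array(
        'fall_back'    => 'iphone_3g',
        'capabilities' => array('model_name' => 'iPhone 3GS'),
    ),
);

/**
 * Start at the matched device and walk up its fallback chain, adding only
 * the capabilities that a more specific device has not already set.
 */
function buildCapabilities(array $devices, $deviceId)
{
    $capabilities = array();
    $seen = array(); // guards against a circular fallback chain
    while ($deviceId !== null && isset($devices[$deviceId]) && !isset($seen[$deviceId])) {
        $seen[$deviceId] = true;
        // The array union operator keeps existing keys, so the values from
        // the more specific device win over those of its parents.
        $capabilities += $devices[$deviceId]['capabilities'];
        $deviceId = $devices[$deviceId]['fall_back'];
    }
    return $capabilities;
}

$capabilities = buildCapabilities($devices, 'iphone_3gs');
```

Because each entry stores only what differs from its parent, the `iphone_3gs` entry above holds a single value and still resolves to a complete capabilities array.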

The capabilities array is now cached with the User Agent so the next time the device visits the site it will be detected extremely quickly.

Capabilities are Available to the Server
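The caching idea from the “Results are Cached” step is shown here as a minimal in-memory sketch. Tera-WURFL keeps its cache in the database, so the `CapabilityCache` class, its `misses` counter and the stand-in detection callback below are assumptions used only to show the lookup-then-store pattern.

```php
<?php
// Hypothetical cache wrapper for illustration; not part of the Tera-WURFL API.
class CapabilityCache
{
    private $entries = array();
    public $misses = 0;

    /**
     * Return the cached capabilities for $userAgent, running the (slow)
     * $detect callback only the first time that user agent is seen.
     */
    public function get($userAgent, $detect)
    {
        if (!array_key_exists($userAgent, $this->entries)) {
            $this->misses++;
            $this->entries[$userAgent] = call_user_func($detect, $userAgent);
        }
        return $this->entries[$userAgent];
    }
}

// Stand-in for a full detection run; a real one would match the user agent
// and walk the fallback chain.
$detect = function ($userAgent) {
    return array('user_agent' => $userAgent, 'is_wireless_device' => true);
};

$cache  = new CapabilityCache();
$first  = $cache->get('Nokia6300/2.0 (04.20)', $detect); // detection runs
$second = $cache->get('Nokia6300/2.0 (04.20)', $detect); // served from the cache
```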

The process is finished and the capabilities are now available for use in your scripts. One common use, for example, is to redirect mobile devices to a mobile version of the site:

<?php
require_once './TeraWurfl.php';
$wurflObj = new TeraWurfl();
$wurflObj->getDeviceCapabilitiesFromAgent();
// see if this client is on a wireless device
if($wurflObj->getDeviceCapability("is_wireless_device")){
    header("Location: http://yourwebsite.mobi/");
    exit; // stop here so the rest of the page is not sent after the redirect
}
?>

Show a Picture of the Device (optional)

If you have version 2.1.2 or higher, you can show an image of the device (assuming one is available). See the Device Image page for the details.

Here’s a usage example:

<?php
require_once 'TeraWurfl.php';
require_once 'TeraWurflUtils/TeraWurflDeviceImage.php';
$wurflObj = new TeraWurfl();
$wurflObj->getDeviceCapabilitiesFromAgent();
$image = new TeraWurflDeviceImage($wurflObj);
/**
 * The location of the device images as they are accessed on the Internet
 * (ex. '/device_pix/', 'http://www.mydomain.com/pictures/')
 * The filename of the image will be appended to this base URL
 */
$image->setBaseURL('/device_pix/');
/**
 * The location of the device images on the local filesystem
 */
$image->setImagesDirectory('/var/www/device_pix/');
/**
 * Get the source URL of the image (ex. '/device_pix/blackberry8310_ver1.gif')
 */
$image_src = $image->getImage();
if($image_src){
    // If an image exists, show it
    $image_html = sprintf('<img src="%s" border="0"/>',$image_src);
    echo $image_html;
}else{
    // If an image is not available, show a message
    echo "No image available";
}
?>
